Good morning, and happy December. Since we’re starting with a launch story today, we’ll keep the space theme going up here.

Right now, Voyager 1 is less than a year away from being a full light-day (16.1B miles) from the Blue Marble. The spacecraft was built to last five years, but it has lasted nearly 50. It survived its Jupiter and Saturn flybys, entered interstellar space in 2012, and just kept going. When the spacecraft’s memory was recently corrupted, NASA engineers debugged code from 1977 in a 15B-mile, high-stakes surgery with a two-day communications lag, and brought it back to life.
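For the curious, the light-day figure is easy to sanity-check. The sketch below is our own back-of-the-envelope arithmetic, not anything from NASA: it multiplies the speed of light by the seconds in a day, and it assumes the "two-day lag" refers to a round trip (command out, confirmation back) at roughly 15B miles.

```python
# Back-of-the-envelope check of the light-day and comms-lag figures.
C_KM_PER_S = 299_792.458   # speed of light in km/s (exact by definition)
KM_PER_MILE = 1.609344     # international mile in km (exact by definition)
SECONDS_PER_DAY = 86_400


def light_day_miles() -> float:
    """Distance light travels in one day, in miles."""
    return C_KM_PER_S * SECONDS_PER_DAY / KM_PER_MILE


def round_trip_hours(distance_miles: float) -> float:
    """Out-and-back signal time in hours (assumes a straight-line path)."""
    c_miles_per_s = C_KM_PER_S / KM_PER_MILE
    return 2 * distance_miles / c_miles_per_s / 3600


print(f"One light-day: {light_day_miles() / 1e9:.2f}B miles")
print(f"Round trip at 15B miles: {round_trip_hours(15e9):.1f} hours")
```

The first number lands just under 16.1B miles, and the round trip at 15B miles comes out a little short of two days, consistent with the figures above.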

Voyager reminds us that we’ve built things that went faster, lasted longer, and cost less than anyone thought possible. And we can do it again.

IN THIS WEEK’S EDITION:

🚀 The state of global upmass

🔦 A song of silicon and light

🧠 Brain-computer interfaces, plus: Reader Poll


The State of Global Upmass

The story of upmass over the last five years has been simple: There is SpaceX, and then there is everyone else.

Upmass, for the uninitiated, is payload mass to orbit, i.e., the total weight of what we lift to space ex rockets. It’s one of the vital biomarkers of a healthy, robust space economy.

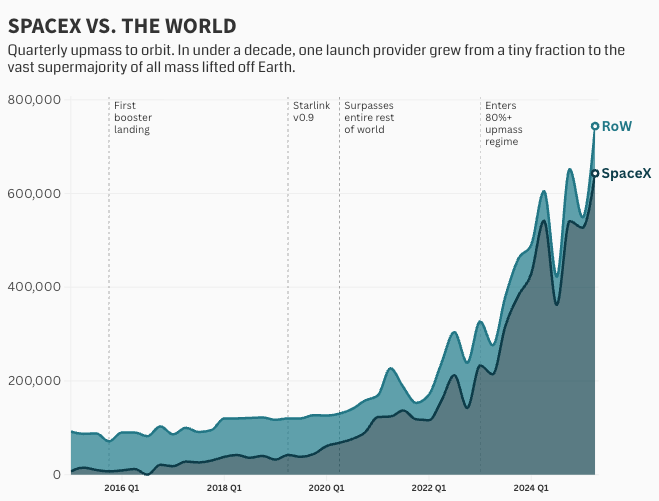

In the third quarter, SpaceX was responsible for 83% of all mass launched to orbit globally, and 97% of American upmass. Since capturing the lion’s share in 2020, SpaceX hasn’t looked back.
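Those two percentages pin down more than they first appear to. As a rough sketch, and assuming all SpaceX upmass counts as American (our assumption, not a figure from the underlying data), dividing the global share by the U.S. share gives America's slice of global upmass. The function name and output labels are ours, for illustration only.

```python
def split_global_upmass(spacex_global: float, spacex_of_us: float) -> dict[str, float]:
    """Split global upmass into SpaceX, other U.S., and rest-of-world shares.

    Assumes every SpaceX launch is counted as American, so that
    (U.S. share of global) = (SpaceX global share) / (SpaceX share of U.S.).
    """
    us_global = spacex_global / spacex_of_us
    return {
        "spacex": spacex_global,
        "other_us": us_global - spacex_global,
        "rest_of_world": 1.0 - us_global,
    }


# Q3 figures quoted above: 83% of global upmass, 97% of American upmass.
for name, share in split_global_upmass(0.83, 0.97).items():
    print(f"{name:>14}: {share:.1%}")
```

Under that assumption, the U.S. accounts for roughly 86% of global upmass, every other American launcher combined for under 3%, and the rest of the world for about 14%.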

Three key trends to watch, all driven by reusable rocketry:

001 // The Starship program. Paradoxically, SpaceX’s biggest threat may very well be…SpaceX. If Starship hits its reliability, cadence, and reuse targets, it will displace Falcon and potentially run up SpaceX’s share into the 90-95%+ range.

Starship currently carries 100-150 metric tons to LEO, with the planned V3 variant projected to exceed 200. For reference, the Falcon 9 tops out at ~17 tons.

002 // New Glenn’s ramp. With its NG-2 mission last month, Blue Origin became the second company in history to land an orbital-class rocket booster when it recovered its first stage on the Jacklyn droneship at sea. Blue has announced upgrades rolling into NG-3 (first-stage thrust 3.9M → 4.5M lbf, upper-stage thrust +25%, reusable fairing, enhanced TPS), plus a super-heavy variant: NG 9×4.

New Glenn 9×4, with nine BE-4s on the booster and four BE-3Us up top, will be capable of lifting ~70 metric tons to LEO. Source: Blue CEO Dave Limp.

Starlink was a key catalyst for SpaceX, giving the company a captive customer that allowed it to ramp launch cadence and upmass on its own terms. New Glenn has its own captive customer in Amazon Leo, the artist formerly known as Project Kuiper, plus a dozen-plus missions targeted for next year and a packed manifest of external customers (including NASA and NSSL certification flights).

003 // Chinese launchers waiting in the wings: Three Chinese rockets, all designed for first-stage recovery, are currently lined up at the Jiuquan Satellite Launch Center in northwest China. Each was expected to debut before year-end, but that’s looking less likely now. LandSpace scrubbed the promising Zhuque-3’s maiden launch for unknown reasons, with apparently substantial delays. Space Pioneer’s Tianlong-3 is on the pad but, rather notably, lacks landing hardware. We’ve seen murmurs online that SAST, the state-owned contractor, is leveraging its influence with the Party to push for its rocket, the Long March 12A, “to go first.”

The state-owned CZ-12A, LandSpace’s ZQ-3, & Space Pioneer’s TL-3 (source)

| Rocket | Developer | Payload (kg) |
| --- | --- | --- |
| Zhuque-3 | LandSpace | 11,800 |
| Tianlong-3 | Space Pioneer | 17,000 |
| Long March 12A | SAST | 12,000 |

It’s likely that one of these developers will eventually succeed, and become the first non-American entity to propulsively land an orbital-class booster. This would mark the beginning of a two-front challenge to SpaceX’s dominance: Blue from the West, and a rapidly maturing Chinese launch sector from the East.

Per Aspera’s Takeaway: The U.S. faces a manufacturing and hardware development deficit across many strategic technology domains. Fortunately, this does not include rockets (see chart above) or satellites (~70% of active birds are American), thanks in massive part to a single firm: SpaceX. But competition is a good thing, and with a pacing challenger in the East, now is not the time to take our foot off the gas. The world is already accelerating: in November, humanity flew 31 orbital missions, marking the first time in history we launched more rockets than there were days in the month.

A Song of Silicon and Light

A decade ago, the House of Google made a strategic bet. Across the Kingdom — Search, Maps, Photos, Translate, and early neural nets — leaders saw two things: (1) torrents of matrix math that conventional chips struggled to handle, and (2) a shift from general-purpose compute toward something heavier and spikier. The lords convened in Mountain View and decided that the time had come to forge their own custom silicon. The Tensor Processing Unit (TPU) program launched without much fanfare, with TPUv1 chips entering production circa 2015.

A decade later, all eyes are now on the TPU. The latest generation, Ironwood (TPUv7), is designed for frontier inference, boasts major performance and efficiency gains, introduces a new 3D topology, sizes up the superpod, and, for the first time, is being marketed and sold to other realms. With Gemini 3 trained on Ironwood, $GOOG at all-time highs, and a defensive House of Nvidia issuing cagey, cringey social media posts, the AI Compute War has a new front.

But something’s missing from the story…how are all of these chips linked?

Doing anything useful with AI today requires delicate orchestration. Thousands of chips must work in concert, passing data back and forth constantly. As scaling laws demand larger clusters, chips stack into racks, racks scale into superpods, and superpods stretch across the datacenter. What’s often lost in chip-level discourse is that moving data (communication) matters as much as doing math (compute).

Copper wiring has been the default interconnect (communication layer) in datacenters for decades. It is cheap, reliable, and effective across short distances. But as clusters grow, copper starts fighting you (signal degradation, heat generation, etc.) and requires more power. At frontier scale, you might eventually end up spending as much on movement as on math.

Photonics is seen as the heir apparent. Where copper carries electrical signals, optical systems encode data in photons that travel through fiber or bounce off tiny mirrors. These pulses of light move with very low loss, minimal heat, and stable timing over distance, precisely the properties that copper lacks at scale.

Critically, the House of Google parlayed its TPU bet with a second wager: Mission Apollo, a massive, multi-$B investment in the dark magic of using light to move data. Since then, the hyperscaler has deployed tens of thousands of custom optical circuit switches (OCS) across its datacenters. Copper still handles local links, but the long roads now increasingly run on light.

The parlay paid out.

Google claims 30% higher throughput, 40% lower power consumption, and 70% lower capex for OCS vs. electrical switching. Both Houses (Google and Nvidia) recognize optical as essential.

But Google architected its entire fabric around optical switching, creating a flatter network that directly connects 9,216 TPUs in a single superpod — and up to 147,000 TPUs at the datacenter level.

Nvidia is optimizing its existing multi-layer architecture with advanced optics, which adds cost and complexity compared to Google's unified approach.

SemiAnalysis recently estimated Ironwood’s total cost of ownership (TCO) at 44% lower than Nvidia’s comparable system (TCO = silicon, networking, power, cooling, etc.). Even when Google adds margin to sell TPUs externally, customers could see 30-40% lower TCO.
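Two bits of arithmetic hide inside those figures. The sketch below is ours, not SemiAnalysis's or Google's: it assumes the 44% and the 30-40% discounts are measured against the same Nvidia baseline, and it expresses Google's margin as a markup on total TCO (in practice the margin may sit on only part of the stack). It also checks how many 9,216-chip superpods fit into the quoted datacenter-level figure. Function names are hypothetical.

```python
def implied_markup(internal_discount: float, external_discount: float) -> float:
    """Markup on total TCO implied by internal vs. external discounts.

    Both discounts are fractions relative to the same Nvidia baseline
    (an assumption on our part).
    """
    internal_cost = 1.0 - internal_discount   # Google's own cost vs. baseline
    external_cost = 1.0 - external_discount   # customer's cost vs. baseline
    return external_cost / internal_cost - 1.0


def superpods_in_cluster(total_chips: int, chips_per_superpod: int) -> float:
    """How many superpods' worth of chips a cluster represents."""
    return total_chips / chips_per_superpod


low = implied_markup(0.44, 0.40)    # customer still saves 40%
high = implied_markup(0.44, 0.30)   # customer saves only 30%
print(f"Implied markup on TCO: {low:.0%} to {high:.0%}")
print(f"Superpods per datacenter: {superpods_in_cluster(147_000, 9_216):.1f}")
```

Under those assumptions, the gap between 44% and 30-40% works out to a markup of roughly 7% to 25% on total TCO, and the datacenter-level figure corresponds to about 16 superpods.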

A Tale Of Two Kingdoms, with Google-exposed names vs. OpenAI ecosystem players. But this is a mere moment in time, and these curves can and likely will cross again, many times, given how quickly the AI narrative shapeshifts. Case in point: Coatue, the creator of this chart, didn’t even include Google in its “Fantastic 40” AI companies a few months ago!

Other fiefdoms are taking notice and lining up at Google’s gates. The Dynasty of Dario (Anthropic) has pledged tens of billions of dollars for up to 1M TPUs (and 1+ GW of capacity in 2026), while the Monarchy of Mark Zuckerberg is also reportedly in alliance talks.

The House of Google, once a search empire with a stable of side projects, has lived to see the day when many of its Other Bets are maturing into indispensable parts of the armory. From custom silicon to optical interconnects, a decade of vertical integration is compounding into a formidable full-stack advantage.

Go Deeper…

Per Aspera won’t miss a chance to point out the interplay between physics and unit economics. And here’s as good an example as any, where the physics of light (lower power, less heat, longer reach) can flow through to the bottom line. This summer, we published a 7,500-word Antimemo on photonics and predicted that an inflection point like this exact moment would arrive. So, set aside some time to read the full writeup on why photons will power the cognitive internet.

Paradromics has received FDA clearance to begin human trials of its Connexus brain-computer interface (BCI), making it one of the few U.S. companies — alongside Neuralink and Synchron — to advance a high-bandwidth, fully implantable cortical device into regulated human testing. The trial is small, focused on restoring communication for people with severe paralysis. But the clearance is notable, confirming that the FDA is willing to evaluate and approve more than a single pathway for invasive, high-channel BCI systems (thereby significantly reducing regulatory risk for the nascent sector).

A brief history: BCI technology was always seen as theoretically sound (direct neural recording beats any external sensor) but practically constrained. FDA processes were opaque, signal processing was crude, and talent was clustered in universities. But three things are shifting simultaneously: (1) the FDA now has a playbook for approving invasive neural devices, (2) top neuroscientists are exiting academia to start/join VC-backed startups, and (3) AI breakthroughs in neural signal decoding have made the software stack more tractable.

These tailwinds explain why we’ve seen venture funding into neurotech ~double over the last three years. Most notably, Neuralink raised $650M in June, and over in the Sam Altman cinematic universe, the OpenAI CEO is reportedly a cofounder of Merge Labs, a BCI startup in the process of raising a $250M seed round. (Inflation has gotten out of control!)

It’s obvious what comes next…of course, we won’t miss the chance to conduct preliminary market research and poll y’all on brain-computer interfaces.

Extra Renaissance Rumblings

Golden Dome & Iron Dome…meet the Michelangelo Dome 🤌 // EU boosts space budget by 30% // U.S. Navy cancels Constellation-class frigate program to instead pursue smaller, faster-to-field surface ships // Ireland’s small navy and limited intelligence sharing labeled a liability for critical transatlantic cables // China’s new tailless J-36 aircraft use thrust vectoring nozzles to steer // Fleet Space finds “massive” lithium deposit in Quebec using its multi-sensor constellation // MFG news & megaprojects: Micron pledges $9.6B for a new HBM facility in Hiroshima to feed the AI supply chain; Toyota tops up U.S. investment plan with an additional $10B; Nokia commits $4B for U.S. R&D/mfg for “AI-ready” networks; AstraZeneca invests $2B in Maryland for biologics manufacturing // Research: Duke Health is piloting defibrillator drones // Integrated photonic mm-wave radar chip developed for 6G networks.